High-Speed-Digital Receiver Testing

Ever wondered how you can plug almost any HDMI device into your TV and it just works? Or how USB sticks connect seamlessly (after flipping them twice, of course)? A big reason is that both the transmitting and receiving sides follow strict standards — not just in terms of protocol, but also the physical signaling and cabling. These standards define exactly how bits are placed onto the wire and how they’re interpreted on the other end.

But during development, how do engineers ensure that devices actually comply with these standards?

Transmitter vs Receiver

Testing transmitters is relatively straightforward: you connect them to high-end measurement gear — oscilloscopes, bit error rate testers, spectrum analyzers — and verify that their signals meet the standard’s physical layer specifications. You measure noise, jitter, amplitudes, rise and fall times, and capture eye diagrams to confirm signal integrity.

Testing receivers, however, is a different challenge entirely. You need to:

- Speak the correct protocol to the device under test.

- Generate precisely controlled, stressed signals with programmable amounts of jitter, noise, and amplitude distortion to probe the receiver’s tolerance.

- Calibrate the entire measurement setup (cables, connectors, fixtures) so the signals at the device pins match the intended stress profile.

- Read out internal status registers to assess how the device reacts under these conditions.

- Automate large sets of compliance test cases to ensure consistent and repeatable results.

Traditionally, this was a labor-intensive and error-prone process. Every test case required careful setup and manual coordination between different pieces of lab equipment.

Scope & Standards

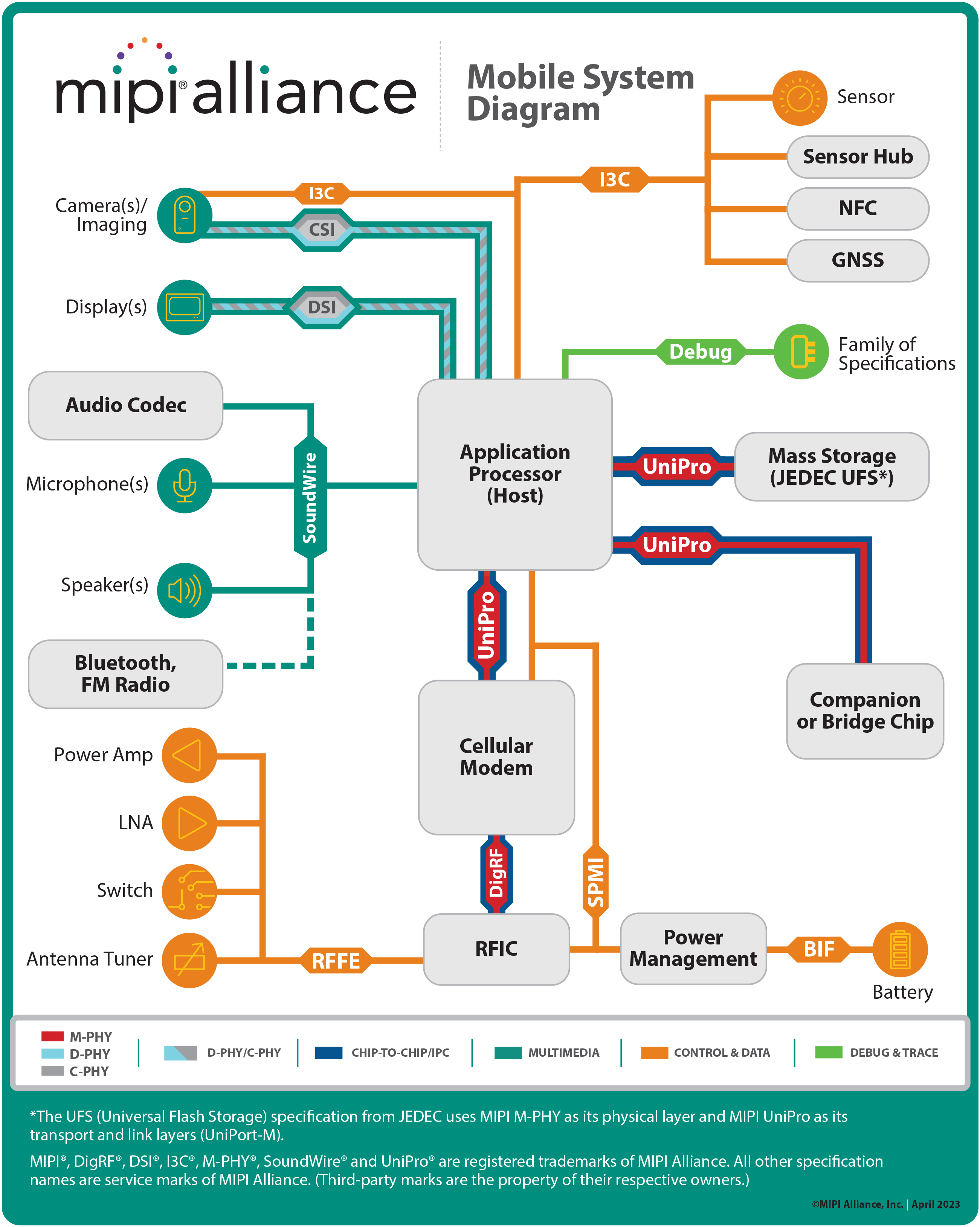

During my time at BitifEye, I worked on automated pre-compliance testing for high-speed digital physical layer receiver tests, focusing primarily on mobile standards like MIPI M-PHY (inter-processor and memory interfaces) and MIPI D-PHY (used for displays), using protocols like DSI-2, CSI-2, and UniPro. BitifEye’s portfolio covered essentially all major high-speed standards — USB, HDMI, PCIe, DisplayPort, and more — and the company was deeply involved in defining those standards to ensure that they were actually testable in practice.

Automation & Instrument Control

My work involved directly programming and controlling test equipment — oscilloscopes, signal generators, and protocol analyzers — through standardized remote control interfaces (e.g., SCPI). I implemented automated test sequences that configured the instruments, calibrated the signal paths, generated stressed waveforms, and performed detailed measurements such as eye diagram acquisition and jitter analysis, all in accordance with official compliance specifications. This significantly reduced manual overhead and improved reproducibility across test runs.

Mobile Environment Constraints

Another aspect I found particularly interesting was the environment in which these mobile standards operate. Devices often run on batteries, so they must tolerate noisy and limited power supplies, while still supporting multi-gigabit data rates to communicate with peripherals. This creates tight design margins and unique signal integrity challenges. In many cases, we designed custom test fixtures to bridge delicate mobile interfaces with lab equipment without compromising signal fidelity.

Standards Collaboration & Interoperability

Beyond lab automation, I regularly participated in MIPI Face-to-Face meetings — conference-style gatherings and workshops where working groups defined, refined, and tested the standards collaboratively. These included interoperability plug sessions where devices from different vendors were connected on the spot to assess real-world interoperability. It was a rare chance to see the full ecosystem come together — silicon vendors, IP providers, test equipment companies, and device manufacturers — and directly influence how standards evolved to ensure they remained both technically rigorous and practically testable.