Projects

I build AI systems that bridge research and production deployment, from generative models for 3D reconstruction to multi-sensor perception systems that process high-volume data in real time. My work spans the full stack: deep learning architectures, classical computer vision, hardware design (FPGA/ASIC), and production system optimization.

Projects range from published IEEE research (diffusion models, state space models) to production-scale perception systems deployed globally. Each demonstrates technical depth, systems thinking, and the ability to translate cutting-edge AI into real-world solutions under real-time constraints.

Multi-Sensor 360° Data Collection and Ground Truth Generation for Autonomous Driving

High‑quality labeled and unlabeled data is still the limiting resource in autonomous driving. A vehicle has to perceive not only “what is where” now, but how objects are moving and how rare edge cases look under different weather, lighting, and traffic patterns. Ground truth is the reference we use to train models, benchmark perception quality, validate safety cases, seed simulation, and evaluate domain shift when the stack or environment changes. A single modality or manual labeling approach cannot scale to the fidelity required.

Free-Viewpoint Video (FVV)

Free‑viewpoint video (FVV) lets a viewer move virtually through a captured real scene as if a camera could be placed anywhere—pausing, orbiting, diving in—rather than being limited to the fixed physical cameras. It changes how live events, performances, training sessions or remote collaboration can be experienced: the viewer chooses the path. Achieving that freedom with real‑time responsiveness and reasonable cost is far from trivial.

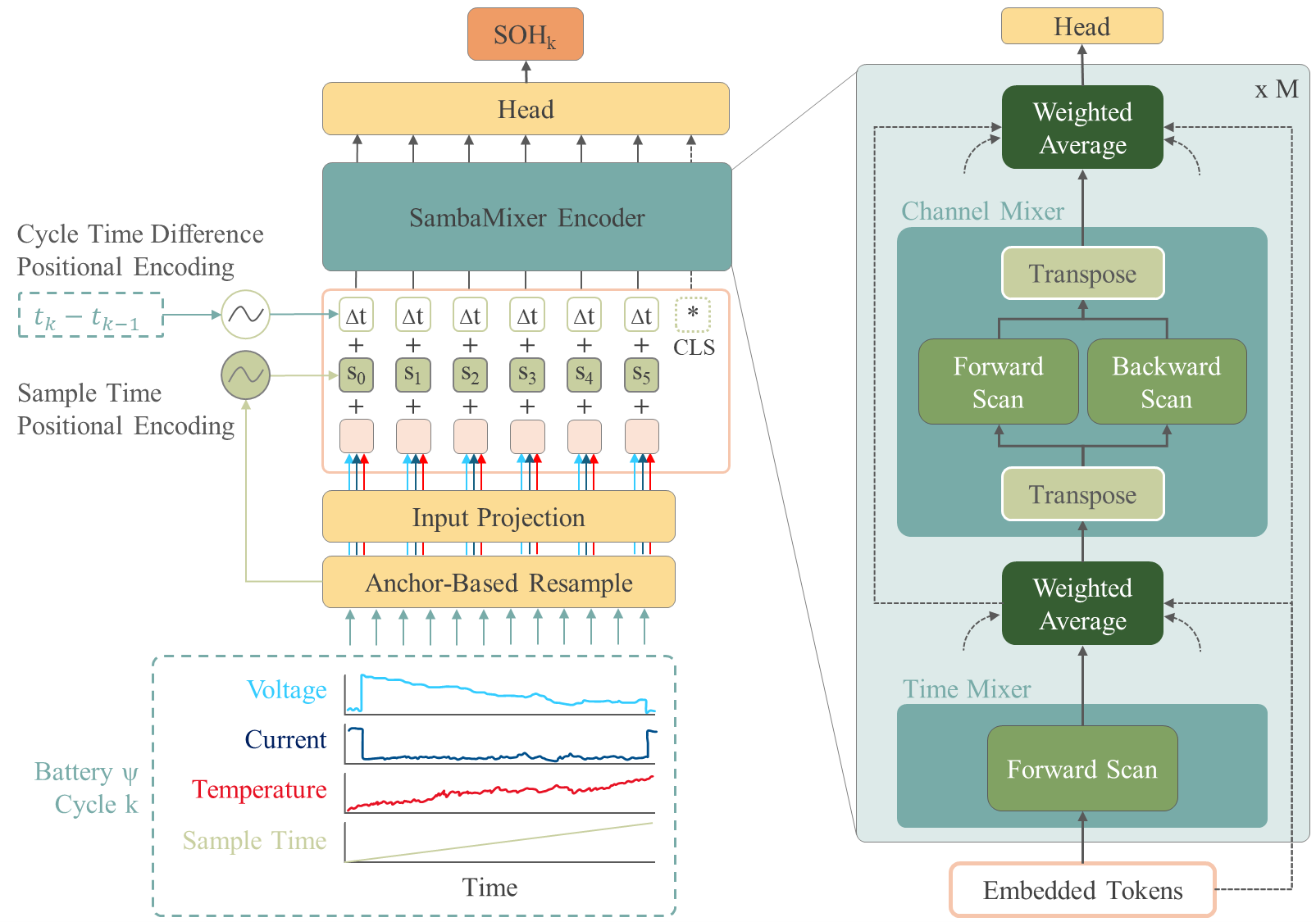

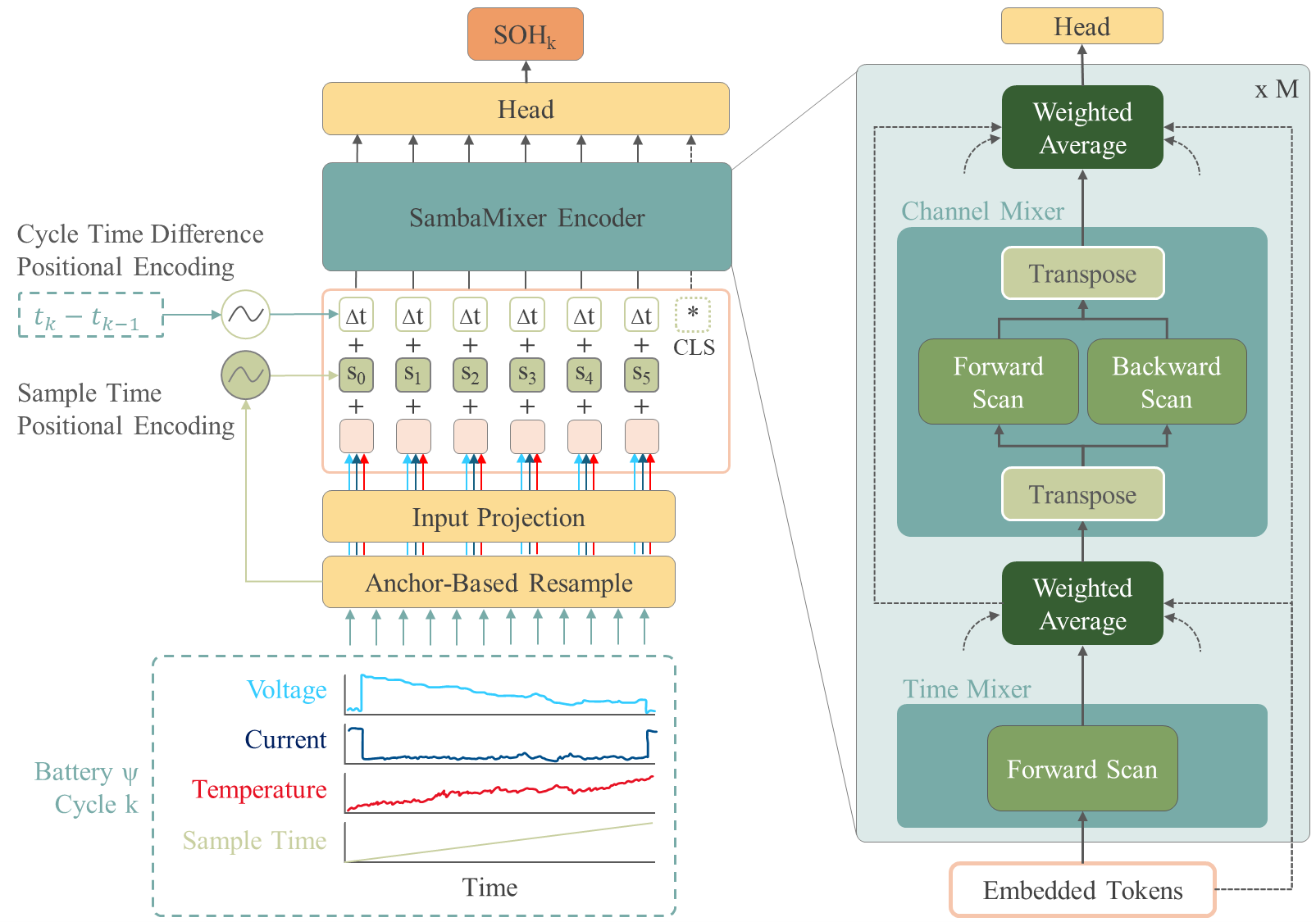

SambaMixer - State of Health Prediction of Li-ion Batteries using Mamba State Space Models

The state of health (SOH) of a Li-ion battery is determined by complex interactions among its internal components and external factors. Approaches leveraging deep learning architectures have been proposed to predict the SOH using convolutional networks, recurrent networks, and transformers. Recently, Mamba selective state space models have emerged as a new sequence model that combines fast parallel training with data efficiency and fast sampling. In this paper, we propose SambaMixer, a Mamba-based model for predicting the SOH of Li-ion batteries using multivariate time signals measured during the battery’s discharge cycle. Our model is designed to handle analog signals with irregular sampling rates and recuperation effects of Li-ion batteries. We introduce a novel anchor-based resampling method as an augmentation technique. Additionally, we improve performance and learn recuperation effects by conditioning the prediction on the sample time and cycle time difference using positional encodings. We evaluate our model on the NASA battery discharge dataset, reporting MAE, RMSE, and MAPE. Our model outperforms previous methods based on CNNs and recurrent networks, reducing MAE by 52%, RMSE by 43%, and MAPE by 7%.
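The three error metrics named above follow their standard definitions. As a minimal sketch (this is not the paper's evaluation code; the function name `soh_errors` and the sample values are made up for illustration), they can be computed like this:

```python
import math


def soh_errors(y_true, y_pred):
    """Return (MAE, RMSE, MAPE in percent) for two equal-length sequences."""
    if len(y_true) != len(y_pred) or len(y_true) == 0:
        raise ValueError("inputs must be non-empty and of equal length")
    diffs = [p - t for t, p in zip(y_true, y_pred)]
    n = len(diffs)
    # Mean absolute error: average magnitude of the prediction error.
    mae = sum(abs(d) for d in diffs) / n
    # Root mean squared error: penalizes large errors more strongly.
    rmse = math.sqrt(sum(d * d for d in diffs) / n)
    # Mean absolute percentage error: error relative to the true value.
    # Assumes no true value is zero, which holds for SOH expressed as a ratio > 0.
    mape = 100.0 * sum(abs(d) / abs(t) for d, t in zip(diffs, y_true)) / n
    return mae, rmse, mape


# Hypothetical SOH values (fraction of nominal capacity), for illustration only.
true_soh = [1.0, 0.8, 0.5]
pred_soh = [0.9, 0.8, 0.6]
mae, rmse, mape = soh_errors(true_soh, pred_soh)
print(f"MAE={mae:.4f} RMSE={rmse:.4f} MAPE={mape:.2f}%")
```

MAE and RMSE are in the same unit as the SOH itself, while MAPE is relative, which is why the three can improve by quite different percentages for the same model.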

IEEE HKN Talks

HKN Talks is a series created and hosted by IEEE Eta Kappa Nu Nu Alpha. We invite experts from industry and academia to give talks on IEEE-related topics. We publish the talks on our YouTube channel to make them publicly available to everyone.

DeepSaki TensorFlow Add-On

DeepSaki is an add-on to TensorFlow. It provides a variety of custom classes ranging from activation functions to entire models, helper functions that make it easier to connect to your compute hardware, and much more!

The project started as a fun project to learn and to collect the code snippets I was using in my projects. It has since grown into a modern software package with CI/CD and documentation.

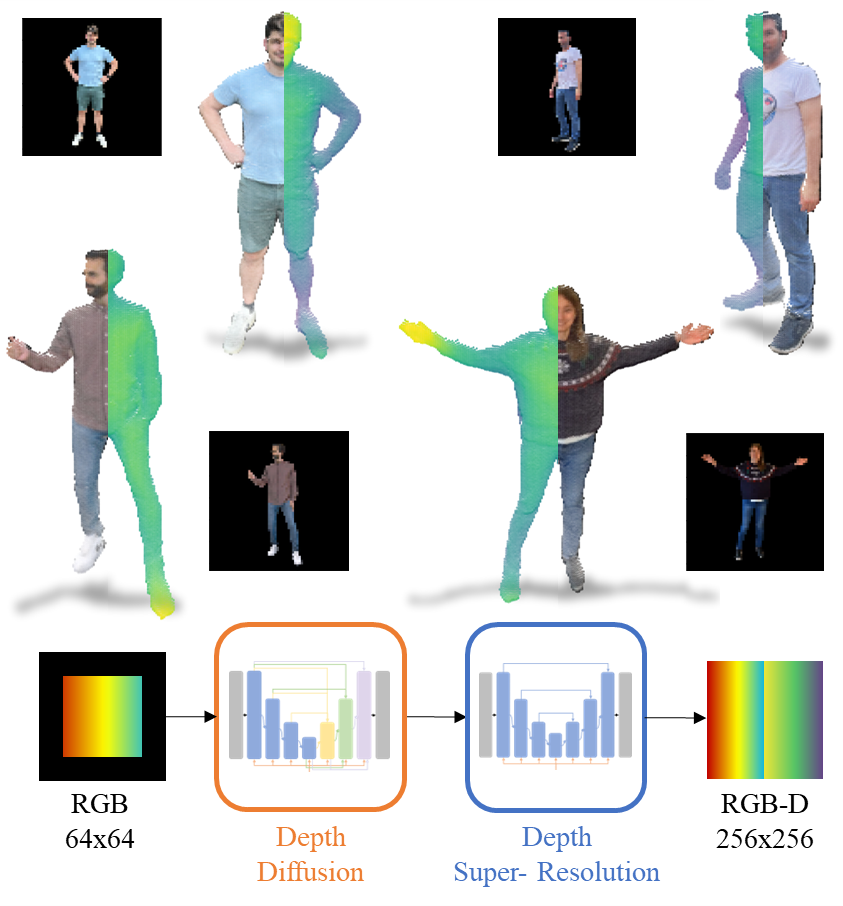

RGB-D-Fusion - Image Conditioned Depth Diffusion of Humanoid Subjects

We present RGB-D-Fusion, a multi-modal conditional denoising diffusion probabilistic model to generate high-resolution depth maps from low-resolution monocular RGB images of humanoid subjects. RGB-D-Fusion first generates a low-resolution depth map using an image-conditioned denoising diffusion probabilistic model and then upsamples the depth map using a second denoising diffusion probabilistic model conditioned on a low-resolution RGB-D image. We further introduce a novel augmentation technique, depth noise augmentation, to increase the robustness of our super-resolution model.

Automotive Radar Electronics

Automotive radar quietly solves problems that cameras, lidar and ultrasonic sensors cannot handle alone: seeing and measuring motion in rain, fog, snow, glare and darkness, at long range, with direct velocity information. It underpins adaptive cruise control, emergency braking, blind‑spot detection and cross‑traffic alerts, and it feeds higher‑level fusion in driver assistance stacks.

Adversarial Domain Adaptation of Synthetic 3D Data to Train a Volumetric Video Generator Model

In cooperation with Volograms, we synthetically generated training data from an existing (similar but different) data distribution by performing adversarial domain adaptation using an improved CycleGAN to train a single-view reconstruction model for VR/AR applications.

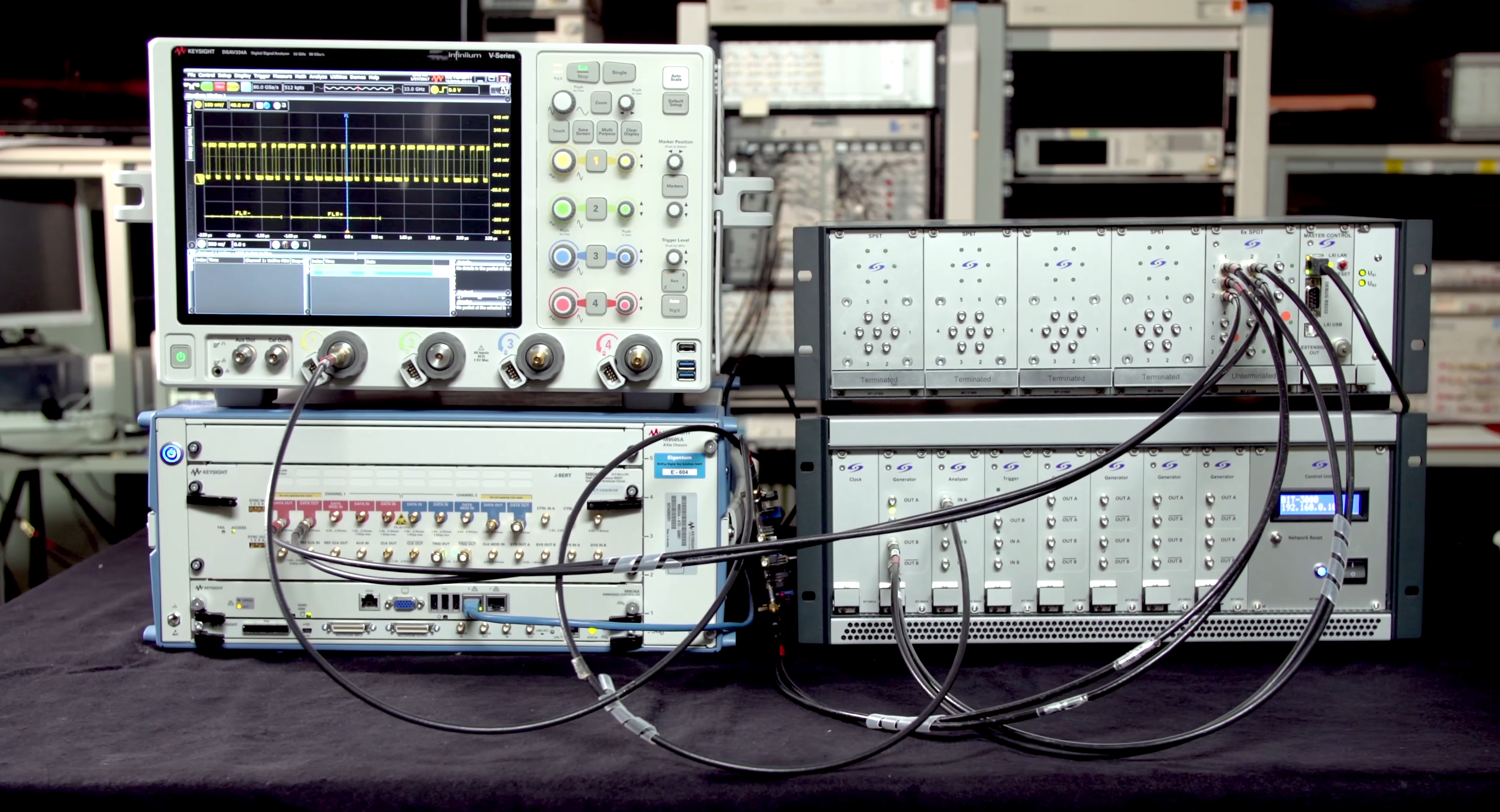

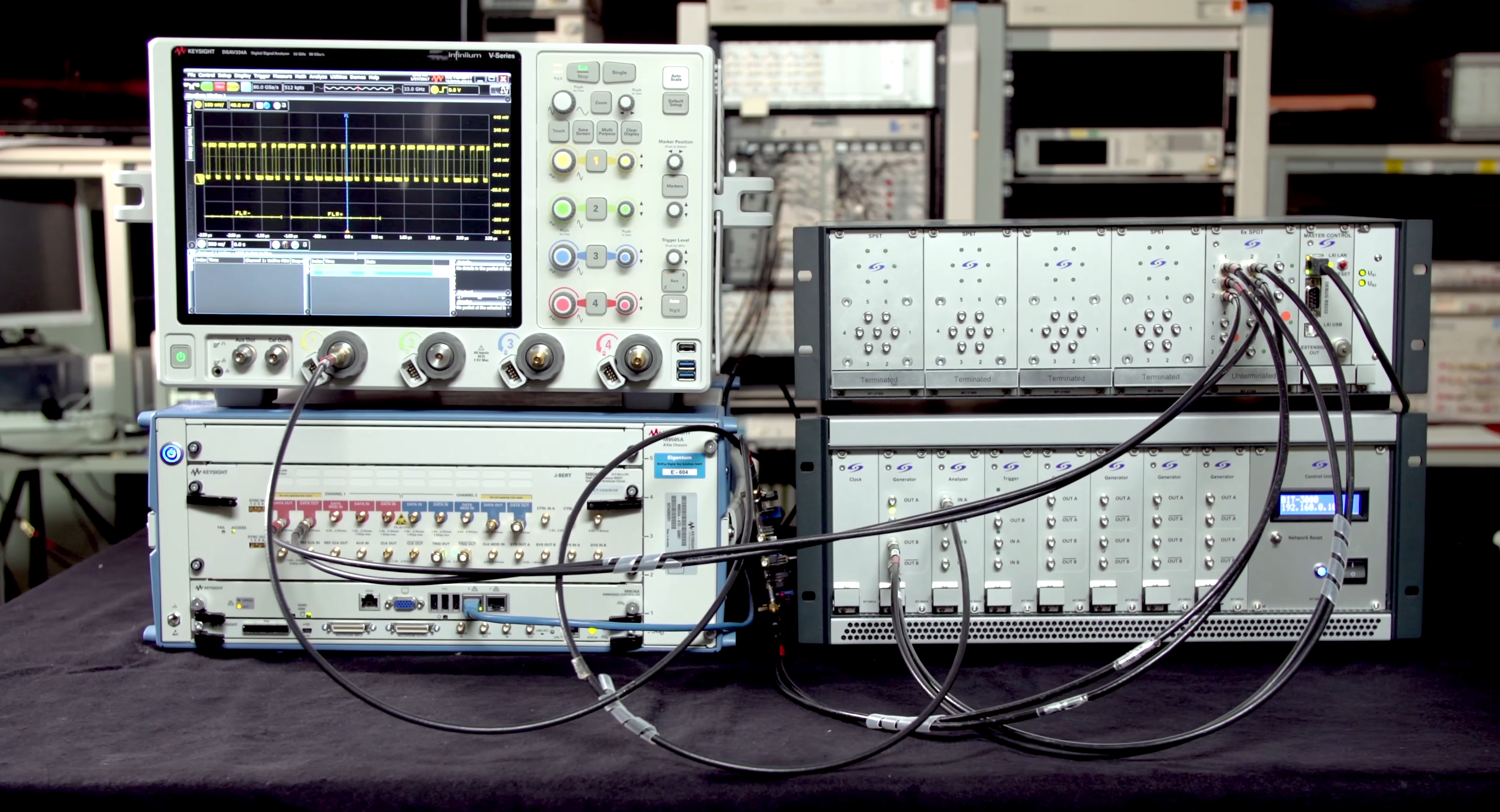

Dynamic Sequencing Generator and Analyzer (DSGA) and Automated High-Bandwidth Switch System

Receiver tests in the lab are often too “ideal”. Real silicon does not sit forever in a steady high‑speed lane sending a single pattern. It flips between high‑speed bursts and low‑power states, exchanges sideband control, reconfigures lanes, reports counters and status, then dives back into data again. Two hardware pieces made those real behaviors testable in an automated way: a Dynamic Sequencing Generator & Analyzer (DSGA) and a modular high‑bandwidth switching system. Classic AWGs (Arbitrary Waveform Generators) and BERTs (Bit Error Rate Testers) are great at stressed data (jittered PRBS, de‑emphasis, ISI). They are not built to script low‑speed protocol “glue” or to read device feedback while stress is applied. The DSGA plus switching closes that gap.
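To make the "stressed data" side concrete, here is a small sketch of what a BERT does at its core: it generates a known pseudo-random bit sequence and counts mismatches on the received side. The sketch uses the common PRBS7 polynomial x^7 + x^6 + 1. The function names are hypothetical, and this is not the DSGA's implementation, which additionally has to sequence low-speed protocol traffic and read device feedback.

```python
def prbs7(n_bits, seed=0x7F):
    """Generate n_bits of a PRBS7 sequence (polynomial x^7 + x^6 + 1)."""
    if not 0 < seed < 128:
        raise ValueError("seed must be a non-zero 7-bit value")
    state = seed
    bits = []
    for _ in range(n_bits):
        # Feedback bit is the XOR of the two taps (register bits 7 and 6).
        new_bit = ((state >> 6) ^ (state >> 5)) & 1
        bits.append(new_bit)
        # Shift the 7-bit register and insert the feedback bit.
        state = ((state << 1) | new_bit) & 0x7F
    return bits


def bit_error_rate(sent, received):
    """Fraction of positions where the received bit differs from the sent bit."""
    if len(sent) != len(received) or len(sent) == 0:
        raise ValueError("inputs must be non-empty and of equal length")
    errors = sum(s != r for s, r in zip(sent, received))
    return errors / len(sent)


# Illustration: send one full PRBS7 period and flip two bits "on the wire".
pattern = prbs7(127)
received = list(pattern)
received[3] ^= 1
received[100] ^= 1
print(bit_error_rate(pattern, received))
```

A sequence like this repeats every 127 bits, so the receiver can regenerate the expected pattern locally and compare it bit by bit without needing a copy of the transmitted data.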

High-Speed-Digital Receiver Testing

Ever wondered how you can plug almost any HDMI device into your TV and it just works? Or how USB sticks connect seamlessly (after flipping them twice, of course)? A big reason is that both the transmitting and receiving sides follow strict standards — not just in terms of protocol, but also the physical signaling and cabling. These standards define exactly how bits are placed onto the wire and how they’re interpreted on the other end.